Artificial intelligence (AI) is unleashing powerful disruptive forces across the global economy. While its applications could unlock transformational efficiency gains and innovation, it also threatens to rapidly erode historic competitive advantages.

Nearly three-quarters of S&P 500 companies now flag at least one material risk related to AI in their public disclosures – up from just 12% in 2023.1 Yet how many are well prepared to manage these risks?

In their rush to adopt AI, companies must not overlook the risks arising from product liabilities, algorithmic biases and cybersecurity breaches, whether these arise through their own development or adoption of AI or via the indirect disruption that AI causes to their business models.

It is our conviction that sound governance of AI risk leads to more effective decision-making that reduces risks and improves trust among stakeholders. In doing so, it should help enhance shareholder value from adoption of AI. Based on engagements with our investee companies in 2025, we share what we believe to be good practice.

Company risks amplified by AI

We have used real-world evidence and our proprietary sustainability frameworks to identify five types of risk amplified by AI, each of which could incur significant financial costs for companies (and society) if mismanaged. Sound management of each could translate into opportunities, however.

First, product liabilities. Companies that develop AI models or integrate them into operations and product development face heightened legal and reputational risks when these models do not ensure the safety of end users or unfairly discriminate against them. Legal claims are already being made against algorithmic bias in recruitment: a hiring discrimination class-action lawsuit has been brought against US enterprise software company Workday by a candidate who alleges that more than 100 job applications with companies using Workday’s AI-based hiring tools were rejected due to unlawful screening.2

Second, digital disruption. Within the software space, there is rising awareness of the potential competitive risks posed directly by AI. Shares in data and information services companies sold off sharply in early February 2026 on fears that Anthropic’s Claude Cowork tool could enable automation within financial, legal and accounting workflows.3 AI can also enhance cybersecurity by simulating and predicting evolving attack patterns.4 Its misuse can, however, heighten cybersecurity risks. According to IBM, 20% of organisations have suffered a breach involving ‘shadow AI’ (where unauthorised AI tools are adopted by employees, bypassing corporate IT security): most did not have an AI governance policy in place.5

Third, labour market disruption. If deployed effectively, AI can reduce labour-related costs and improve company margins. However, attention ought to be paid to the unintended negative consequences that rapid workforce restructuring – and unstructured adoption of AI – can have on corporate culture and intangible drivers of company value. AI also risks undermining the value propositions of businesses, such as consultancies, which are built on monetising access to specialist expertise.

Fourth, resource intensity and climate change. The energy and water intensity of training AI models – and their use – creates bottlenecks that could slow down their adoption. It also poses reputational and financial risks to companies in the upstream AI supply chain.6 The proliferation of AI-focused data centres – each of which typically consumes as much electricity as 100,000 homes – places pressure on local electricity grids and water resources.7 Public opposition had blocked or delayed US data centre projects worth US$162bn, as of June 2025 – with US$98bn in Q2 2025 alone.8

Fifth, supply chain dependencies. The rising strategic importance of AI, compounded by its technical complexities and critical supply chain nodes, implies that companies in the AI supply chain are vulnerable to rising geopolitical tensions over access to AI-related infrastructure. While US-China tensions have temporarily abated, we expect these risks to continue to create uncertainty in the months and years to come.

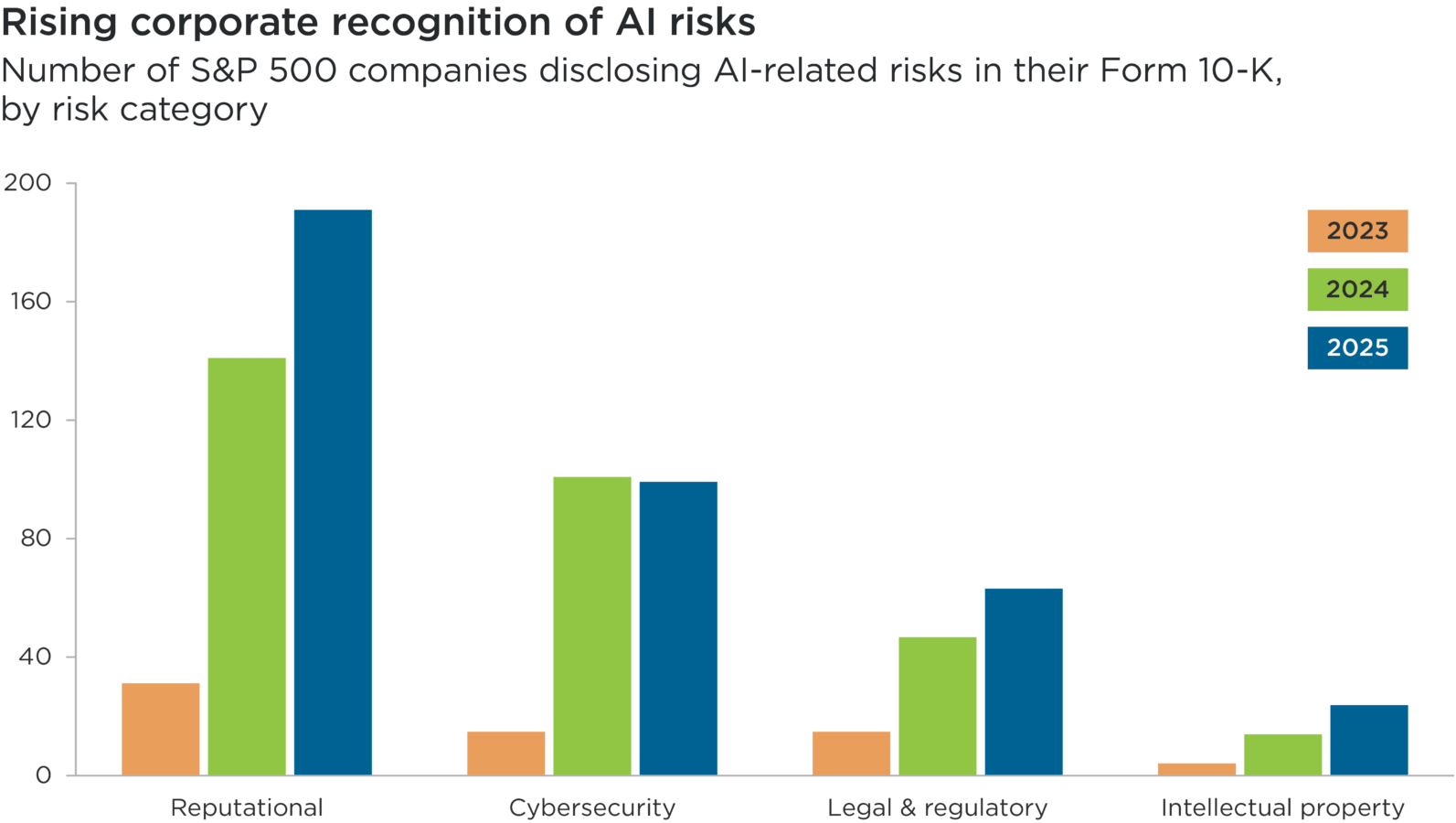

Source: The Conference Board / ESGUAGE, 2025.

Note that some companies disclose more than one AI risk. 2025 data is current as at 15 August 2025.

Subhead: Number of S&P 500 companies disclosing AI-related risks in their Form 10-K, by risk category

Overview: This bar chart shows the number of the largest 500 listed US companies (those comprising the S&P 500 Index) that formally disclosed specific types of AI-related risks in their reporting in 2023, 2024 and 2025. Four types of AI risks are included: reputational; cybersecurity; legal & regulatory; and intellectual property.

Overall, this chart illustrates the sharp upward trend in disclosure of AI-related risks by large US companies between 2023 and 2025. Reputational risk is the most widely reported, followed by cybersecurity.

What does good AI governance look like?

From a systematic scan of 163 GICS sub-industries, we have derived a shortlist of fewer than 30 sub-industries where AI poses highly material financial risks – and / or presents opportunities – and where Impax portfolios have exposure.

Our first phase of company engagement has prioritised sub-industries with exposure to product liability risks and algorithmic bias. These include software (both application and systems), recruitment, research and consulting, construction, telecoms and healthcare equipment companies.

This engagement has highlighted wide disparities in companies’ approaches to AI risk management. It has also underscored the importance of the following five aspects of AI governance – and identified examples of good practice for each.

- Robust AI governance structures

The best governance processes are dynamic and proportionate to company-specific risks. They should also be responsive to the competitive environment and potential disruption a company is likely to face due to adoption of AI in the wider economy.

At the board level, AI governance is commonly overseen by the audit/risk committee with individual board members often designated as leads. At the executive level, we see cross-functional executive AI committees as best practice, including oversight of AI’s use within products and user experiences. US product lifecycle management software company PTC, for example, has established a multi-tiered AI governance framework incorporating a cross-functional AI ‘Action Committee’. This committee – which reports to a strategic AI steering committee – has a remit to develop AI policies, evaluate AI use cases and develop AI training programmes.

- Training and expertise

Almost all companies we engaged with have some form of AI-related training at board and executive leadership levels. Some have established training across their workforces, including role-specific training and, in some cases, using ‘AI champions’ to promote training and awareness of company-wide AI applications.

Leading practices demonstrate extensive investment in AI literacy and proactively foster an AI-aware culture. Dutch telecoms group KPN, for example, has run dedicated sessions for its top 250 leaders to elevate AI awareness and to equip them to effectively manage AI-related risks and opportunities.

- Identifying, assessing and prioritising AI use cases

Oversight and management of AI risks need to be proportionate and relevant to specific AI use cases.

US workflow management software company ServiceNow’s dedicated AI steering committee serves as a good example. It provides cross-functional governance of AI development and is responsible for AI policy. The committee also reviews individual use cases where an advisory group has determined that they are sufficiently ‘high risk’. Lower-risk use cases are not escalated but are dealt with by the company’s ‘AI control tower’.
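A tiered review protocol of this kind can be sketched in a few lines of code. The example below is a minimal illustration only and does not describe ServiceNow's actual process: the three risk criteria, the escalation threshold and all names (`UseCase`, `risk_score`, `route`) are hypothetical assumptions introduced for the purpose.

```python
from dataclasses import dataclass

# Hypothetical threshold: use cases meeting this many criteria are escalated.
ESCALATION_THRESHOLD = 2


@dataclass
class UseCase:
    name: str
    affects_individuals: bool  # e.g. hiring, lending or health decisions
    uses_personal_data: bool
    fully_automated: bool      # no human review of outputs


def risk_score(use_case: UseCase) -> int:
    """Count how many of the assumed risk criteria a use case meets."""
    return sum([
        use_case.affects_individuals,
        use_case.uses_personal_data,
        use_case.fully_automated,
    ])


def route(use_case: UseCase) -> str:
    """Escalate high-risk use cases; leave the rest to routine review."""
    if risk_score(use_case) >= ESCALATION_THRESHOLD:
        return "steering committee"
    return "routine review"
```

Under these assumed criteria, a CV-screening tool that affects individuals and uses personal data would be escalated, while an internal meeting-notes summariser that meets none of the criteria would stay in routine review. In practice the criteria would need to reflect company-specific risks.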

- Clear AI policy/principles

Our engagements uncovered examples of robust implementation of AI policies and principles across four specific areas of risk, in particular.

First, data security. US infrastructure consultant AECOM creates ‘synthetic data’ to train proprietary models within a secure internal development environment, with all outputs reviewed by engineers.

Second, bias and discrimination. At Recruit, which owns online job platform Indeed, product development teams test and monitor AI models before and after launch to check for biases. The Japan-listed company also undertakes internal audits on hiring recommendations to check models are working as anticipated.

Third, accountability. There is a common emphasis on keeping humans ‘in the loop’. While there are efforts towards AI adoption within healthcare, US-listed robotic systems maker Intuitive Surgical has focused on using AI to reduce surgeons’ cognitive load during surgery, not on replacing them.

Fourth, transparency. US-listed design software company Autodesk has published whitepapers and established AI product transparency cards which disclose product functionality, data sources and safeguards to customers.

- Impact assessment

We found that few companies have published clear frameworks for monitoring and consistently measuring the returns on investment of AI use, whether in their operations or product development. This makes it hard to ensure that returns are increasing over time and that company strategies are resilient to economy-wide AI disruption.

Driving shareholder value from AI

As we expand our engagement to other sub-sectors, we will articulate ‘key asks’ of companies concerning their AI governance and risk management processes, policies and training. To manage risks, and so drive shareholder value from AI, we expect:

- Robust AI governance processes, including clear board oversight, use of a cross-functional oversight committee to review AI use and a protocol for identifying and escalating high-risk use cases

- Transparent use of AI, including disclosure of company AI policy and principles and of how they are applied and integrated into company strategy

- Firm-wide training and education, with training tailored to roles and responsibilities and board-level AI training as a minimum requirement

We will continue to focus our dialogues on how companies are measuring returns on their investment in AI within operations and product development, and how they are preparing for shocks to their competitive edge from economy-wide AI adoption. Additionally, we will work with companies on how biases in AI systems can be mitigated through a combination of data auditing and governance, diverse teams, retaining human oversight and regular bias detection. We will also seek to deepen engagement on the extent to which a company’s culture enables AI readiness and its efforts to ensure workforce culture is resilient amidst AI-driven disruption.
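To illustrate what a regular bias detection check might involve, the sketch below applies one widely used screening heuristic, the 'four-fifths rule', which compares each group's selection rate with that of the most-selected group and flags ratios below 0.8. It is a simplified example under stated assumptions: the function names are hypothetical, the figures are invented for illustration, and a flagged ratio is a prompt for further review, not proof of discrimination.

```python
def selection_rates(outcomes):
    """Map each group to its selection rate: selected / applicants."""
    return {group: sel / total for group, (sel, total) in outcomes.items()}


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no applicants were selected in any group")
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(outcomes)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Invented figures: (candidates selected, candidates who applied).
example = {"group_a": (50, 100), "group_b": (30, 100)}
print(flagged_groups(example))
```

Here `group_b` is flagged, because its selection rate of 30% is only three-fifths of the 50% rate for `group_a`. A check like this covers only one dimension of bias and would sit alongside the data auditing, human oversight and diverse teams noted above.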

In a landscape where AI disruption is outpacing preparation, these engagements are not only timely but imperative to understand and manage risks across investor portfolios.

1 The Conference Board, October 2025: New Study: 7 in 10 Big US Companies Report AI Risks in Public Disclosures

2 Callaham, S., 13 January 2026: Applied For A Job Through Workday? Court-Authorized Opt-In Is Now Open. Forbes

3 Steer, G., Thomas, D. & Stafford, P., 3 February 2026: US stocks drop on fears AI will hit software and analytics groups. Financial Times

4 IBM, December 2025: Security automation: Accelerating your cybersecurity with AI

5 IBM, 2025: Cost of a Data Breach Report 2025

6 A 100MW hyperscale data centre can directly consume around 2.5bn litres of water each year – equivalent to the needs of about 80,000 people. Impax analysis, based on figures from World Economic Forum, November 2024: Why circular water solutions are key to sustainable data centres

7 IEA, April 2025: Energy and AI

8 Data Center Watch, July 2025

References to specific securities are for illustrative purposes only and should not be considered as a recommendation to buy or sell. Nothing presented herein is intended to constitute investment advice and no investment decision should be made solely based on this information. Nothing presented should be construed as a recommendation to purchase or sell a particular type of security or follow any investment technique or strategy. Information presented herein reflects Impax Asset Management’s views at a particular time. Such views are subject to change at any point and Impax Asset Management shall not be obligated to provide any notice. Any forward-looking statements or forecasts are based on assumptions and actual results are expected to vary. While Impax Asset Management has used reasonable efforts to obtain information from reliable sources, we make no representations or warranties as to the accuracy, reliability or completeness of third-party information presented herein. No guarantee of investment performance is being provided and no inference to the contrary should be made.

United States
